Abstract

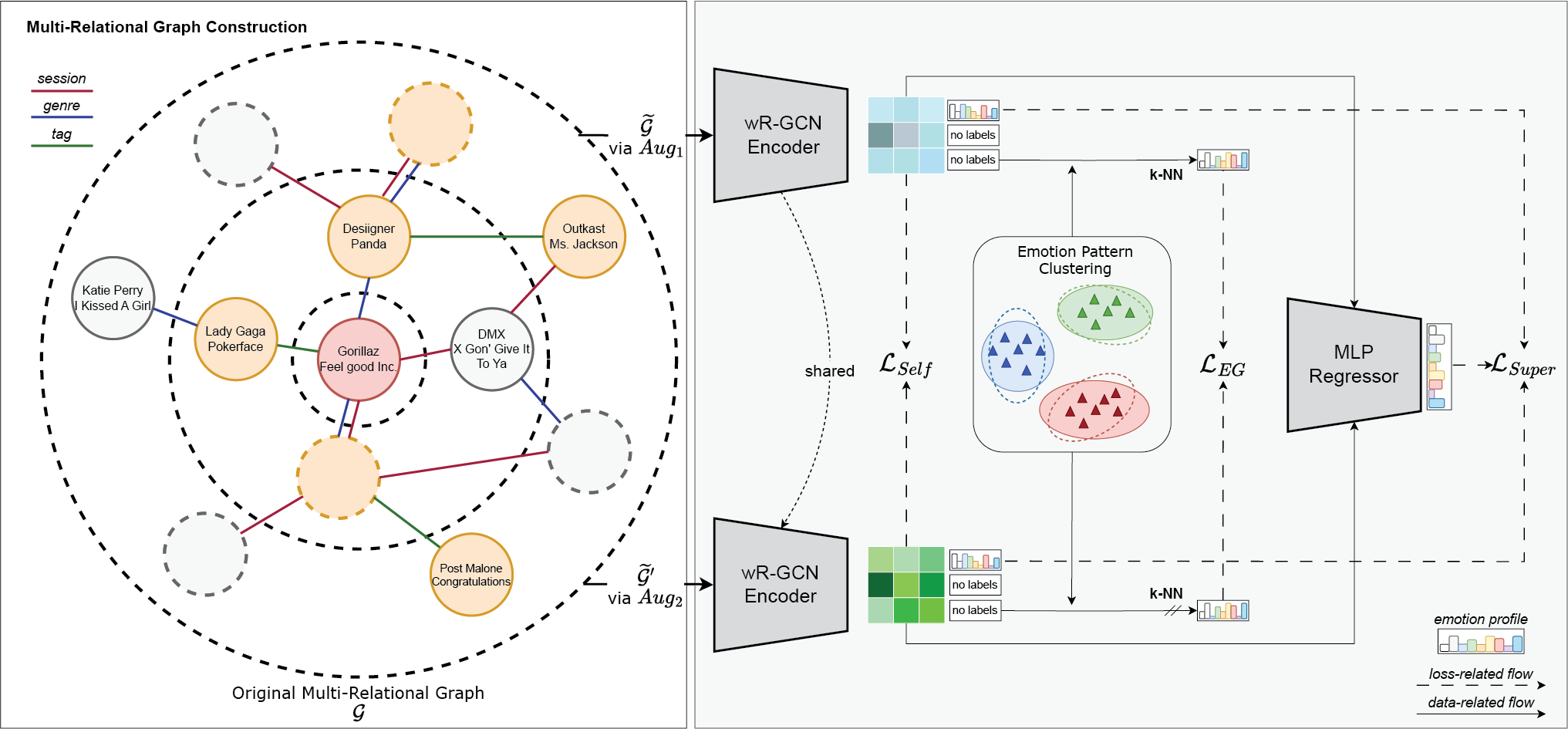

Music emotion recognition (MER) seeks to understand the complex emotional landscapes elicited by music, acknowledging music's profound social and psychological roles beyond traditional tasks such as genre classification or content similarity. MER relies heavily on high-quality emotional annotations, which serve as the foundation for training models to recognize emotions. However, collecting these annotations is both complex and costly, which limits the availability of large-scale datasets for MER. Recent efforts to extract emotion automatically have focused on learning track representations in a supervised manner. These approaches mainly use simplified emotion models, either because datasets are limited or because sophisticated emotion models were not considered necessary, and they ignore hidden inter-track relations, which are beneficial in a semi-supervised learning setting. This paper proposes a novel approach to MER by constructing a multi-relational graph that encapsulates different facets of music. We leverage graph neural networks to model intricate inter-track relationships and capture structurally induced representations from user data, such as listening histories, genres, and tags. Our model, the semi-supervised multi-relational graph neural network for emotion recognition (SRGNN-Emo), combines graph-based modeling with semi-supervised learning, using rich user data to extract nuanced emotional profiles from music tracks. In extensive experiments, SRGNN-Emo achieves significant improvements in R² and root mean squared error when predicting the intensity of nine continuous emotions (Geneva Emotional Music Scale), indicating a superior capability for capturing and predicting complex emotional expressions in music.

Citation

Andreas Peintner, Marta Moscati, Yu Kinoshita, Richard Vogl, Peter Knees, Markus Schedl, Hannah Strauss, Marcel Zenter, Eva Zangerle. Nuanced Music Emotion Recognition via a Semi-Supervised Multi-Relational Graph Neural Network. Transactions of the International Society for Music Information Retrieval, 8: 140-153, doi:10.5334/tismir.235, 2025.

BibTeX

@article{Peintner2025emotional_rec,
  title = {Nuanced Music Emotion Recognition via a Semi-Supervised Multi-Relational Graph Neural Network},
  author = {Peintner, Andreas and Moscati, Marta and Kinoshita, Yu and Vogl, Richard and Knees, Peter and Schedl, Markus and Strauss, Hannah and Zenter, Marcel and Zangerle, Eva},
  journal = {Transactions of the International Society for Music Information Retrieval},
  publisher = {Ubiquity Press},
  doi = {10.5334/tismir.235},
  url = {https://transactions.ismir.net/},
  volume = {8},
  pages = {140--153},
  month = {April},
  year = {2025}
}