Abstract

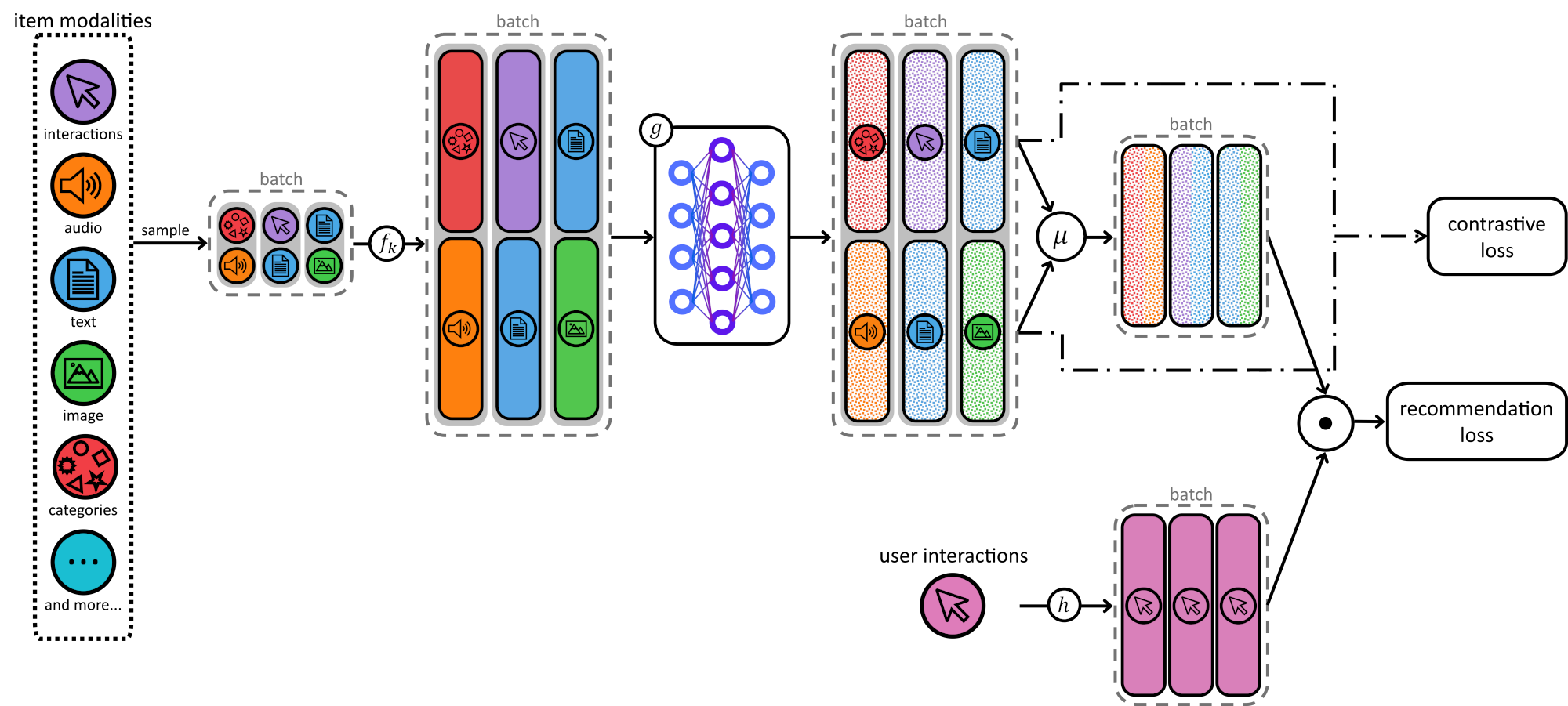

Traditional recommender systems rely on collaborative filtering (CF), using past user–item interactions to help users discover new items in a vast collection. In cold start, i.e., when interaction histories of users or items are not available, content-based recommender systems (CBRSs) use side information instead. Most commonly, user demographics and item descriptions are used for user and item cold start, respectively. Hybrid recommender systems (HRSs) often employ multimodal learning to combine collaborative information with user and item side information, which we jointly refer to as modalities. Though HRSs can provide recommendations when some modalities are missing, their quality degrades. In this work, we utilize single-branch neural networks equipped with weight sharing, modality sampling, and contrastive loss to provide accurate recommendations even in missing modality scenarios, including cold start. Unlike in multi-branch architectures, the weights of the encoding modules are shared across all modalities; in other words, all modalities are encoded using the same neural network. This, together with the contrastive loss, is essential in reducing the modality gap, while the modality sampling is essential in modeling missing modalities during training. Simultaneously leveraging these techniques results in more accurate recommendations. We compare these networks with multi-branch alternatives and conduct extensive experiments on the MovieLens 1M, Music4All-Onion, and Amazon Video Games datasets. Six accuracy-based and four beyond-accuracy-based metrics help assess the recommendation quality for the different training paradigms and their hyperparameters on single- and multi-branch networks in warm-start and missing modality scenarios. We quantitatively and qualitatively study the effects of these different aspects on bridging the modality gap.
Our results show that single-branch networks provide competitive recommendation quality in warm start, and significantly better performance in missing modality scenarios. Moreover, our study of modality sampling and contrastive loss on both single- and multi-branch architectures indicates a consistent positive impact on accuracy metrics across all datasets. Overall, the three training paradigms collectively encourage modalities of the same item to be embedded closer together than those of different items, as measured by Euclidean distance and cosine similarity. This results in embeddings that are less distinguishable and more interchangeable, as indicated by a 7–20% drop in modality prediction accuracy. Our full experimental setup, including training and evaluation code for all algorithms, their hyperparameter configurations, and our result analysis notebooks, is available at https://github.com/hcai-mms/single-branch-networks.
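To make the three training paradigms named in the abstract more concrete, the following is a minimal NumPy sketch, not the authors' implementation (which is available in the linked repository). It assumes that each modality is first mapped to a common width by its own linear projection and is then passed through one shared layer (weight sharing), that two modalities are drawn at random per training step (modality sampling), and that a symmetric InfoNCE-style objective stands in for the contrastive loss. All names (`SingleBranchEncoder`, `sample_modalities`, `contrastive_loss`), the dimensions, and the temperature are hypothetical choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the sketch is reproducible


def l2_normalize(x, eps=1e-12):
    """Scale each row to (approximately) unit Euclidean length."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


class SingleBranchEncoder:
    """Hypothetical encoder: per-modality input projection, then ONE shared layer."""

    def __init__(self, modality_dims, hidden_dim=16, embed_dim=8):
        # Assumption: modalities differ in size, so each gets its own
        # linear map to a common width before entering the shared branch.
        self.input_proj = {
            name: rng.normal(0.0, 0.1, size=(dim, hidden_dim))
            for name, dim in modality_dims.items()
        }
        # Weight sharing: the same matrix encodes every modality.
        self.shared_w = rng.normal(0.0, 0.1, size=(hidden_dim, embed_dim))

    def encode(self, modality, features):
        hidden = np.maximum(features @ self.input_proj[modality], 0.0)  # ReLU
        return l2_normalize(hidden @ self.shared_w)


def sample_modalities(available, k=2):
    """Modality sampling: pick k distinct modalities for one training step."""
    picked = rng.choice(len(available), size=k, replace=False)
    return [available[i] for i in picked]


def contrastive_loss(z_a, z_b, temperature=0.1):
    """Symmetric InfoNCE-style loss; row i of z_a and z_b describe the same item."""
    logits = z_a @ z_b.T / temperature

    def cross_entropy_on_diagonal(scores):
        scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
        log_probs = scores - np.log(np.exp(scores).sum(axis=1, keepdims=True))
        return -np.mean(np.diag(log_probs))

    return 0.5 * (cross_entropy_on_diagonal(logits)
                  + cross_entropy_on_diagonal(logits.T))


# Toy batch of 4 items with three made-up modalities.
modality_dims = {"interactions": 20, "text": 12, "audio": 6}
encoder = SingleBranchEncoder(modality_dims)
batch = {m: rng.normal(size=(4, d)) for m, d in modality_dims.items()}

mod_a, mod_b = sample_modalities(list(modality_dims))
z_a = encoder.encode(mod_a, batch[mod_a])
z_b = encoder.encode(mod_b, batch[mod_b])
loss = contrastive_loss(z_a, z_b)
```

Minimizing such a loss pulls embeddings of the same item from different modalities together and pushes embeddings of different items apart, which is the effect the abstract describes as reducing the modality gap. Because only a subset of modalities is encoded in each step, the shared network is also exposed to inputs where some modalities are absent, loosely mirroring cold-start conditions.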

[Figure panels: Training, Inference]

Citation

Christian Ganhör, Marta Moscati, Anna Hausberger, Shah Nawaz, Markus Schedl

Single-Branch Network Architectures to Close the Modality Gap in Multimodal Recommendation

ACM Transactions on Recommender Systems, doi:10.1145/3769430, 2025.

BibTeX

@article{Ganhoer2025TORS_SiBraR,
  title = {Single-Branch Network Architectures to Close the Modality Gap in Multimodal Recommendation},
  author = {Ganhör, Christian and Moscati, Marta and Hausberger, Anna and Nawaz, Shah and Schedl, Markus},
  journal = {ACM Transactions on Recommender Systems},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3769430},
  doi = {10.1145/3769430},
  year = {2025}
}